Used in: DynamoDB

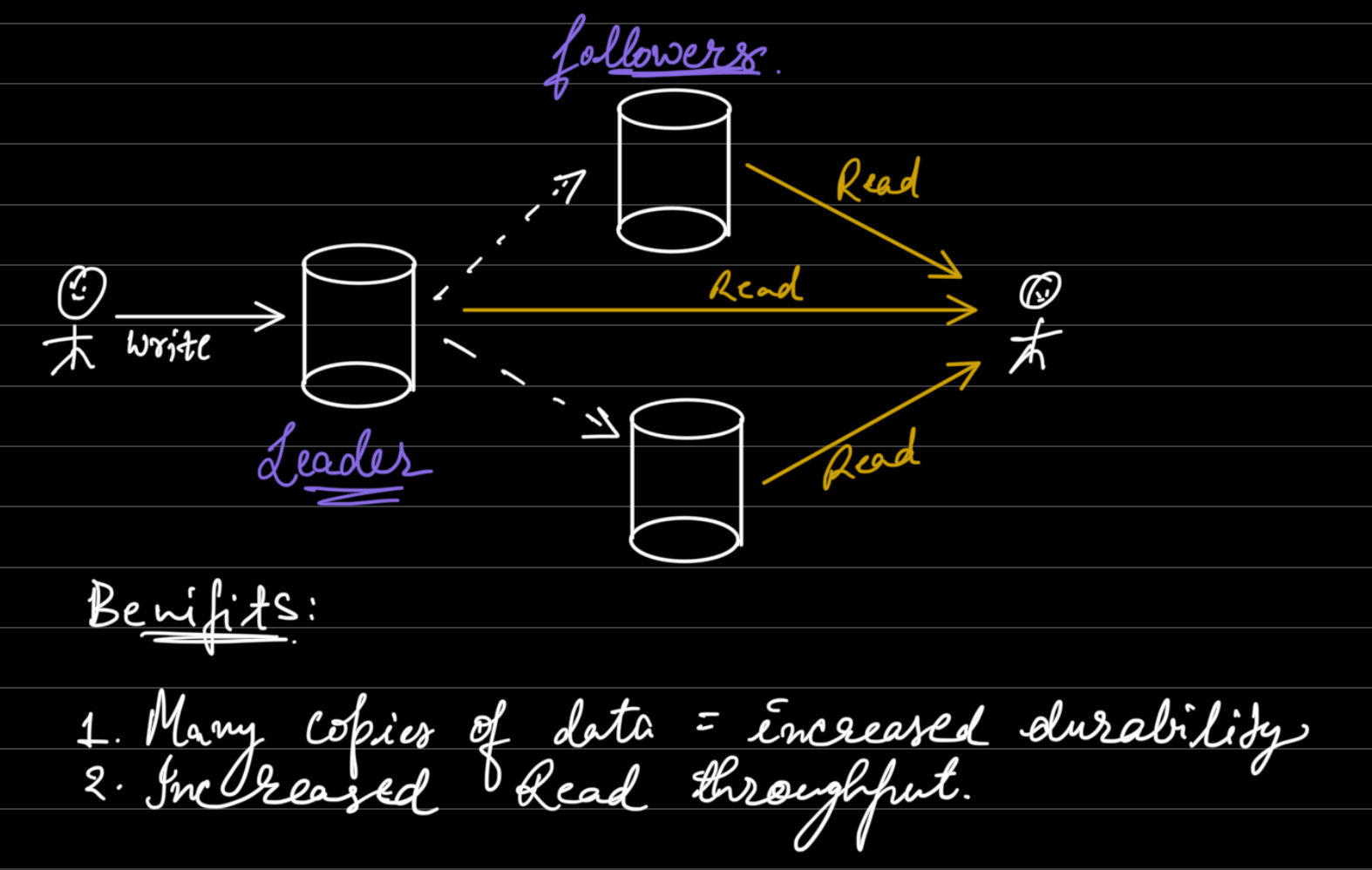

How does single-leader replication work?

- Write Operations:

  - The user writes to the leader node, which processes the write and updates its local storage.

- Replication to Followers:

  - The leader asynchronously replicates the changes to the follower nodes.

  - Each follower updates its data store to reflect the changes made by the leader.

- Read Operations:

  - Read requests can be handled by either the leader or the followers.

  - This helps with load balancing: followers can serve read traffic, reducing the load on the leader.
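The flow above can be sketched in a few lines of Python. This is a minimal in-memory illustration, not how any real database implements it: the `Leader` and `Follower` classes, the `replicate()` method, and the `"price"` key are all hypothetical names made up for this example, and the explicit `replicate()` call stands in for asynchronous log shipping over the network.

```python
class Follower:
    def __init__(self):
        self.data = {}
        self.applied = 0  # number of log entries applied so far

    def apply(self, entries):
        # Apply log entries in the order the leader wrote them.
        for key, value in entries:
            self.data[key] = value
            self.applied += 1

    def read(self, key):
        return self.data.get(key)


class Leader:
    def __init__(self, followers):
        self.data = {}
        self.log = []  # ordered replication log of (key, value) pairs
        self.followers = followers

    def write(self, key, value):
        # The leader updates its own storage and appends to the log.
        self.data[key] = value
        self.log.append((key, value))

    def replicate(self):
        # Stand-in for asynchronous shipping: send each follower
        # only the entries it has not applied yet.
        for follower in self.followers:
            follower.apply(self.log[follower.applied:])

    def read(self, key):
        return self.data.get(key)


followers = [Follower(), Follower()]
leader = Leader(followers)

leader.write("price", 10)
stale = followers[0].read("price")  # replication has not run yet
leader.replicate()
fresh = followers[0].read("price")  # follower has caught up
```

Because replication is asynchronous, a read served by a follower between `write` and `replicate` can return old data (here `stale` is `None`), which is the price paid for spreading read traffic across followers.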

Use Cases

- E-commerce Websites: High read-to-write ratio where product details (reads) are accessed frequently, but updates (writes) are less frequent.

- Content Management Systems: Articles or posts are read by many users, but written by fewer users.

- Social Media Platforms: Posts and comments are frequently read by users, but writes are less frequent compared to reads.

Let’s look at Failure Scenarios:

1. Follower goes down.

   - The leader sends its replication log to every follower.

   - So when a follower goes down and comes back up, the leader can resend everything after the last position that follower had reached in the replication log.
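A rough sketch of this catch-up, assuming the log is a simple list and each follower remembers how many entries it has applied. The names `ship_log`, `last_applied`, and `online` are hypothetical and exist only for this illustration.

```python
class Follower:
    def __init__(self):
        self.data = {}
        self.last_applied = 0  # position reached in the leader's log
        self.online = True

    def apply(self, entries):
        for key, value in entries:
            self.data[key] = value
            self.last_applied += 1


def ship_log(leader_log, follower):
    """Send the entries the follower has not applied yet; return how many were sent."""
    if not follower.online:
        return 0
    missing = leader_log[follower.last_applied:]
    follower.apply(missing)
    return len(missing)


leader_log = [("a", 1)]
f = Follower()
ship_log(leader_log, f)              # follower is caught up at position 1

f.online = False                     # follower goes down
leader_log += [("b", 2), ("a", 3)]   # leader keeps accepting writes
sent_while_down = ship_log(leader_log, f)

f.online = True                      # follower comes back up
sent_after_recovery = ship_log(leader_log, f)
```

After recovery the follower receives only the two entries it missed, not the whole log, because the leader resumes from `last_applied`.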

2. Leader goes down. This case has multiple scenarios.

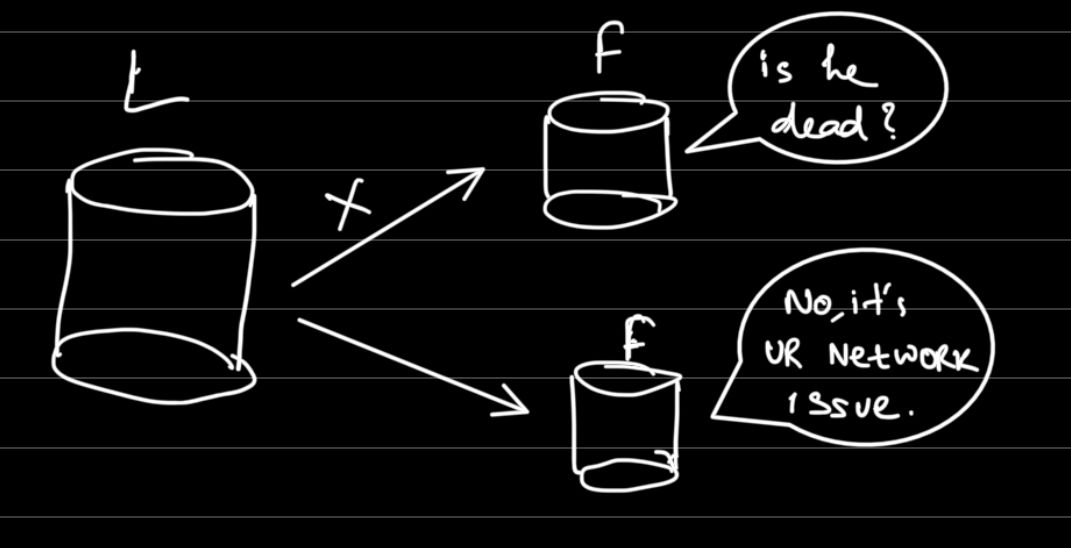

Scenario 1: Leader unable to contact its followers.

The leader might be unable to communicate with some followers because of a network issue. Since replication is asynchronous, there is no immediate feedback, so followers cannot tell whether the leader has actually crashed or whether a network partition is merely hiding it from them. This leaves the followers uncertain about the leader's state.
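One common way to cope with this is a heartbeat timeout. The sketch below is an assumption brought in for illustration (the notes above do not prescribe it), and `LeaderMonitor`, `timeout_s`, and the timestamps are hypothetical. Times are passed in explicitly so the example is deterministic.

```python
class LeaderMonitor:
    """Follower-side guess about leader health, based only on heartbeats."""

    def __init__(self, timeout_s):
        self.timeout_s = timeout_s
        self.last_heartbeat = 0.0

    def on_heartbeat(self, now):
        self.last_heartbeat = now

    def suspects_leader(self, now):
        # True means "no heartbeat for too long". It cannot say why:
        # a crashed leader and a network partition look identical here.
        return now - self.last_heartbeat > self.timeout_s


monitor = LeaderMonitor(timeout_s=3.0)
monitor.on_heartbeat(now=10.0)
healthy = monitor.suspects_leader(now=12.0)    # 2.0s of silence
suspected = monitor.suspects_leader(now=14.5)  # 4.5s of silence
```

Note that `suspects_leader` is only a suspicion. The leader may be perfectly healthy on the other side of a partition, which is exactly the uncertainty described above.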

Scenario 2: Writes lost due to leader failure.

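The last write was written to the leader's replication log, but because replication is asynchronous the leader can fail before that entry reaches any follower. If a follower is then promoted, the write is lost.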

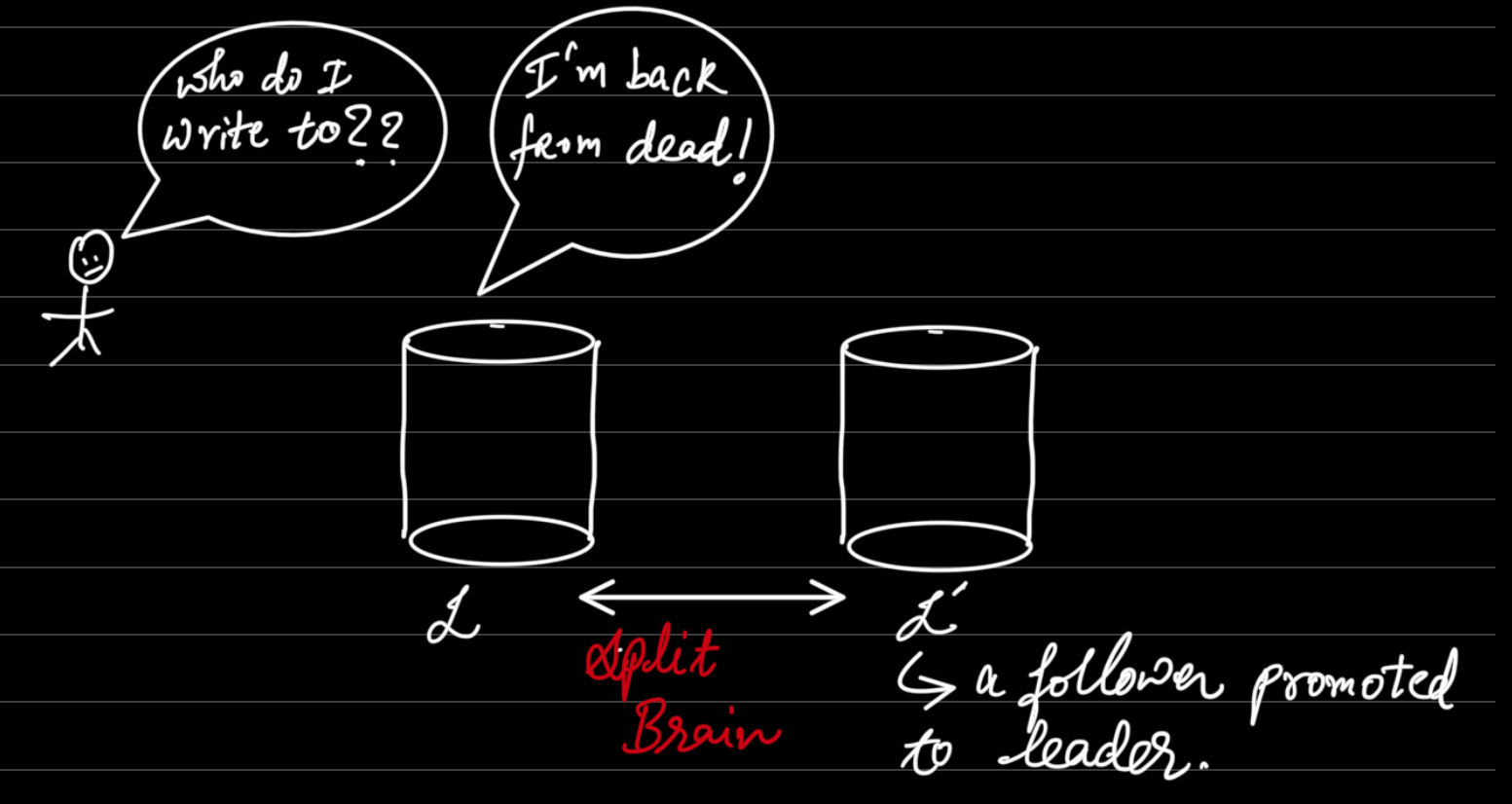
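A tiny sketch of how a write gets lost on failover. The keys and values are hypothetical and are not taken from the figure; the logs are plain lists for illustration.

```python
# The leader accepted three writes and acknowledged them to clients.
leader_log = [("x", 1), ("x", 2), ("x", 3)]

# Only the first two reached the follower before the leader crashed.
follower_log = leader_log[:2]

# The follower is promoted with whatever it has.
new_leader_log = list(follower_log)

# Anything past the follower's position never existed for the new leader.
lost_writes = leader_log[len(new_leader_log):]
```

Here `lost_writes` holds the single entry `("x", 3)`: the client was told the write succeeded, yet the new leader has no record of it.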

Scenario 3: Split brain

- Occurs when a network partition leads multiple nodes to believe that they are the leader.

- This can cause data inconsistencies and conflicts, because followers receive writes from more than one leader.

To fix this we need Distributed Consensus!
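Full consensus is beyond a short example, but one ingredient used by consensus protocols such as Raft can be sketched: every leader carries a term (epoch) number, and followers reject writes from a leader whose term is older than the newest one they have seen. This is a simplified illustration under that assumption, not a consensus algorithm; `handle_write` and `current_term` are hypothetical names, and how nodes agree on a new term is exactly the part consensus has to solve.

```python
class Follower:
    def __init__(self):
        self.current_term = 0  # highest leader term seen so far
        self.data = {}

    def handle_write(self, term, key, value):
        # Reject writes from a leader that has been superseded.
        if term < self.current_term:
            return False
        self.current_term = term
        self.data[key] = value
        return True


f = Follower()
accepted_old = f.handle_write(term=1, key="x", value="from old leader")
accepted_new = f.handle_write(term=2, key="x", value="from new leader")
# The old leader never noticed it was replaced and keeps sending writes.
rejected = f.handle_write(term=1, key="x", value="late write from old leader")
```

The late write from the term-1 leader is refused, so the follower keeps the value written by the term-2 leader instead of flip-flopping between two leaders.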

Conclusion:

Simple, and it is easy to modify an existing DB to add replicas. Node failures are relatively easy to recover from. Followers give more read throughput.

But what if we want more write throughput? Multi-leader replication, and the chaos that comes with it.